The impact of packed malware is discussed more thoroughly by Tzi-cker Chiueh of Symantec Research Labs in a 2007 presentation on the difficulties in detecting malware, where he devotes 5 slides to the "Packer Problem". Packing adds another dimension to the production of malware signatures, which must now account for potentially ten or more layers of packing that obscure the real code that needs to be scanned. Symantec knows of over 1000 different packing programs, all of which need to be recognised and stripped off before signature scanning can be applied.

But is it possible to distinguish between suspicious and actual packed malware using statistical properties of the files without unpacking?

Three authors (Tim Erbinger, Li Sun & Serdar Boztas) have done some interesting research into developing specific randomness tests for detecting packed malware. As it turns out, malware does exhibit a definite randomness signal when the randomness measure accounts for local patterns and structure.

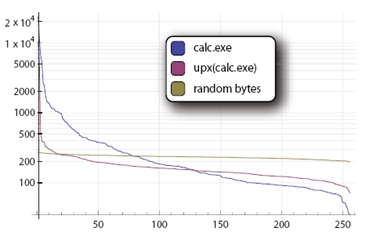

The paper begins by observing that common randomness measures, such as entropy or other statistical tests, compute a global measure of randomness over all available data and so tend to obscure local sections of code that may be highly random or highly structured. The figure shows the distribution of bytes in the program calc.exe before and after packing with UPX. The distribution becomes more uniform after packing, but is still far from uniform.
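To make the "global measure" point concrete, here is a minimal sketch of Shannon entropy computed over the byte histogram of a whole buffer. It is not code from the paper; the function name `byte_entropy` and the toy inputs are assumptions chosen for illustration.

```python
import math
from collections import Counter


def byte_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte (0.0 to 8.0) over the whole input."""
    if not data:
        return 0.0
    counts = Counter(data)          # global byte histogram
    total = len(data)
    # sum of p * log2(1/p) over every byte value that occurs
    return sum((c / total) * math.log2(total / c) for c in counts.values())


# Toy inputs (illustrative only, not real binaries)
constant_part = b"A" * 4096                 # highly structured region
uniform_part = bytes(range(256)) * 16       # every byte value equally often
mixed = constant_part + uniform_part        # structured region followed by a random-looking one

print(byte_entropy(constant_part))
print(byte_entropy(uniform_part))
print(byte_entropy(mixed))
```

The mixed buffer gets a single in-between figure, which says nothing about where the structured and the random-looking regions sit. That loss of locality is the limitation the paper sets out to address.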

The authors proposed several randomness tests that preserve locality (structure or lack thereof), based on constructing a Huffman tree at the byte level of the data (packed file). The Huffman tree algorithm computes codes for each observed byte where more frequent bytes are assigned shorter codes. If the data is random, causing the byte frequencies to be similar, then the Huffman tree codes will be roughly of similar length. Structured data on the other hand will produce codes of quite differing lengths.
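The relationship between byte frequencies and code lengths can be sketched with a standard Huffman construction. This is a generic textbook version, not the authors' implementation; `huffman_code_lengths` is a hypothetical helper name, and only the code lengths (not the bit patterns) are computed because the lengths are what the test relies on.

```python
import heapq
from collections import Counter


def huffman_code_lengths(data: bytes) -> dict[int, int]:
    """Map each observed byte value to the length (in bits) of its Huffman code."""
    counts = Counter(data)
    if len(counts) == 1:
        # Degenerate tree: a single symbol still needs one bit
        return {next(iter(counts)): 1}
    # Heap entries: (weight, tiebreak, symbols in this subtree)
    heap = [(w, i, [sym]) for i, (sym, w) in enumerate(sorted(counts.items()))]
    heapq.heapify(heap)
    lengths = dict.fromkeys(counts, 0)
    tiebreak = len(heap)
    while len(heap) > 1:
        w1, _, s1 = heapq.heappop(heap)
        w2, _, s2 = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper
        for sym in s1 + s2:
            lengths[sym] += 1
        heapq.heappush(heap, (w1 + w2, tiebreak, s1 + s2))
        tiebreak += 1
    return lengths


# Structured toy data: one byte dominates, so code lengths differ widely
skewed = b"a" * 90 + b"b" * 5 + b"c" * 3 + b"d" * 2
print(huffman_code_lengths(skewed))

# Random-looking toy data: all 256 byte values equally frequent
uniform = bytes(range(256)) * 4
print(set(huffman_code_lengths(uniform).values()))
```

In the skewed input the dominant byte receives a 1-bit code while the rare bytes receive 3-bit codes; in the uniform input every byte ends up with an 8-bit code.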

The authors then sample the data at various byte positions, collect the corresponding Huffman codes, and normalise the code lengths between 0 and 1 (where a higher value means more random). Two byte sampling strategies are proposed: (1) sample a fixed number of bytes equally spaced in the data, and (2) slide a window of fixed size across all the data. Below we see the sliding window test applied to the basename binary from UnxUtils before and after being packed with FSG 2.0.

UnxUtils has 116 binaries ranging in size from a few KB to about 200 KB, and the authors performed a collection of experiments to validate their local randomness tests using 6 well-known packers.
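The two sampling strategies described above can be sketched as follows. Several details here are assumptions rather than the paper's definitions: code lengths are normalised by dividing by 8 bits and capping at 1.0, the window advances one byte at a time, and each window is summarised by its mean. The function names and the hand-written `toy_lengths` table (standing in for a real Huffman table) are hypothetical.

```python
from typing import Mapping

MAX_BITS = 8  # assumption: 8 bits per byte is treated as "fully random"


def normalised(byte: int, code_lengths: Mapping[int, int]) -> float:
    """Scale a byte's Huffman code length into [0, 1]; higher means more random."""
    return min(code_lengths[byte] / MAX_BITS, 1.0)


def equally_spaced_sample(data: bytes, code_lengths: Mapping[int, int],
                          n_samples: int) -> list[float]:
    """Strategy 1: score a fixed number of bytes at equally spaced positions."""
    if not data or n_samples <= 0:
        return []
    step = max(len(data) // n_samples, 1)
    picked = range(0, len(data), step)
    return [normalised(data[i], code_lengths) for i in picked][:n_samples]


def sliding_window(data: bytes, code_lengths: Mapping[int, int],
                   window: int) -> list[float]:
    """Strategy 2: mean score of every fixed-size window, moved one byte at a time."""
    scores = [normalised(b, code_lengths) for b in data]
    if window <= 0 or len(scores) < window:
        return []
    total = sum(scores[:window])
    out = [total / window]
    for i in range(window, len(scores)):
        total += scores[i] - scores[i - window]  # slide the window by one byte
        out.append(total / window)
    return out


# Hypothetical code-length table: 0x00 is very frequent, 0xFF is rare
toy_lengths = {0x00: 1, 0x41: 4, 0xFF: 8}
toy_data = bytes([0x00] * 4 + [0xFF] * 4)  # structured run, then random-looking run

print(equally_spaced_sample(toy_data, toy_lengths, 2))
print(sliding_window(toy_data, toy_lengths, 4))
```

On the toy data the window scores climb from 0.125 to 1.0 as the window moves out of the structured run and into the random-looking one, which is the kind of local signal a single global measure would average away.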

The authors observe that packed files exhibit a definite characteristic signal. They go on to propose additional tests that can be used to discriminate between packers.

Returning to Tzi-cker Chiueh and his packer problem, he suggests working around packing with a Just-in-Time scanning approach, which tries to scan a file in its unpacked form just before it runs, and also considering whitelisting. Perhaps this new local randomness test from Erbinger, Sun & Boztas can help him out.
