Pictured left is Dan Egerstad, a 21-year-old Swedish computer security consultant (at times also called a hacker, researcher and other colourful titles) who, almost a year ago now, ran afoul of various authorities for his involvement in the exposure of account details harvested from the Internet-based anonymity service Tor. He obtained password information for 1,000 e-mail accounts belonging to foreign embassies, corporations and human rights organizations, and posted some details to his web site. For his trouble he was taken to the local police headquarters for questioning, and various computing equipment and written material were seized from his domicile. Egerstad claims he only wanted to bring attention to the inherent weaknesses in Tor that he believes are not well known to its users. Another case of irresponsible disclosure or over-zealous awareness? Before examining the details of the Egerstad case we need some background on anonymity (the research material used to write this article was collected in Freemind and is available as a flash web application here).

When we speak of anonymity here we mean anonymity with respect to a digital action, such as sending an email, paying a bill or browsing a site. Such actions are considered to be anonymous if they can be attributed to nobody or attributed to everybody. In practice it is very difficult to ensure that digital actions are attributed to nobody, since Internet protocols are very generous in disclosing information as part of their operation. On the other hand, building systems that attribute actions to everyone is also impractical. Deployed internet anonymity solutions take the approach of making digital actions attributable to a sufficiently large set of users. So while it would be nice to have an anonymous email system whose messages could be attributed to anyone on the planet (once they all have email), the sender may feel that their anonymity is sufficiently protected if the system renders their email potentially attributable to a million people who were online when the email was sent. Anonymity loves a crowd, as the saying goes.

We will now give a short overview of how two well-known anonymity services work, and then get back to the transgressions of Mr. Egerstad.

Dealer-Based Systems

In dealer-based systems, we imagine a large number of people sitting around a big table, with one person, Dixie, distinguished as the dealer. Each person at the table can send or receive a message from someone else at the table. When Alice wants to send a message to Bob, she puts the message in an envelope, writes Bob's name on the front, and passes the addressed envelope face down to Dixie. Dixie accepts the envelope from Alice, and continues collecting envelopes from people until she collects a pre-determined number of messages, let's say 100. Dixie then shuffles the 100 envelopes until their order is random as compared to the order in which they were received, and she then deals the envelopes out as they are addressed.

Someone at the table watching all this, say Eve, learns that Alice gave a message to Dixie, and that Alice's intended recipient is one of the people who were dealt an envelope by Dixie (a group that must include Bob). So the anonymity of Alice's receiver might be said to be 1-in-100, assuming that the 100 messages collected by Dixie were delivered to 100 different people. If Bob is a popular fellow then he may have 10 messages dealt to him by Dixie, and the anonymity of Bob as Alice's receiver is reduced (there are fewer possible receivers to consider).

Here the value of 100 is called the threshold, and reaching the threshold number of messages triggers Dixie to shuffle and deliver. Alice may search for another table where the dealer Debbie has a threshold of, say, 1000, because she wants to hide her recipient amongst a larger set of people. Alice will wait longer for her messages to be delivered, since it will take Debbie more time to collect her threshold of 1000 envelopes as compared to Dixie's 100. There is a tradeoff here between anonymity and latency: that is, between how many other messages your message is shuffled with (the size of the threshold) and the delay in delivering your message (waiting for the dealer to gather the threshold).
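The dealer's behaviour can be sketched in a few lines of Python. This is only a toy model of the table analogy, not the code of any real MIX service; the names `ThresholdMix`, `submit` and `receiver_anonymity_set` are invented here for illustration.

```python
import random


class ThresholdMix:
    """Toy dealer: collects envelopes and deals them out shuffled at a threshold."""

    def __init__(self, threshold, rng=None):
        self.threshold = threshold
        self.rng = rng or random.Random()
        self.batch = []  # (recipient, message) envelopes waiting for the shuffle

    def submit(self, recipient, message):
        """Accept one envelope; return the shuffled batch at the threshold, else []."""
        self.batch.append((recipient, message))
        if len(self.batch) < self.threshold:
            return []
        delivered, self.batch = self.batch, []
        self.rng.shuffle(delivered)  # break the link between arrival and delivery order
        return delivered


def receiver_anonymity_set(delivered):
    """Distinct recipients in one deal: the candidates Eve has to consider."""
    return {recipient for recipient, _ in delivered}


# Dixie's table: threshold 100, with popular Bob receiving 10 of the envelopes.
mix = ThresholdMix(threshold=100, rng=random.Random(1))
out = []
for i in range(100):
    recipient = "bob" if i < 10 else f"user{i}"
    out = mix.submit(recipient, f"msg{i}")
print(len(out), len(receiver_anonymity_set(out)))  # prints: 100 91
```

With Bob taking 10 of the 100 envelopes, Eve only has 91 candidate receivers to consider rather than 100, which is the reduction described above. A larger threshold widens the set, at the cost of waiting longer for the batch to fill.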

The dealer can pull other tricks. A dealer Dylan may have a threshold of say 500 messages but on the first collection he actually accepts 600 envelopes. The 600 envelopes are shuffled, but he puts aside 100 (called the pool) and then delivers the other 500. The next 500 received messages are shuffled with the current pool, 100 set aside for the next pool, and the remaining 500 messages delivered. The pool breaks the direct correlation between when Alice submits her message and when it is dealt by Dylan. Eve will need to consider more potential receivers to determine who Alice might be communicating with.
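Dylan's pool trick can be sketched the same way. Again this is a toy model under the assumptions of the analogy (a fixed threshold and a fixed pool size); `PoolMix` and its method names are hypothetical.

```python
import random


class PoolMix:
    """Toy dealer who holds back a pool of envelopes on every deal."""

    def __init__(self, threshold, pool_size, rng=None):
        self.threshold = threshold
        self.pool_size = pool_size
        self.rng = rng or random.Random()
        self.held = []  # current pool plus envelopes received since the last deal

    def submit(self, envelope):
        """Accept one envelope; deal `threshold` envelopes once enough are held."""
        self.held.append(envelope)
        if len(self.held) < self.threshold + self.pool_size:
            return []
        self.rng.shuffle(self.held)
        # Keep pool_size envelopes for the next round and deliver the rest.
        self.held, delivered = self.held[:self.pool_size], self.held[self.pool_size:]
        return delivered


# Dylan: threshold 500, pool 100. The first deal needs 600 envelopes, later ones 500.
dylan = PoolMix(threshold=500, pool_size=100, rng=random.Random(7))
deals = [d for d in (dylan.submit(i) for i in range(1100)) if d]
print([len(d) for d in deals], len(dylan.held))  # prints: [500, 500] 100
```

Because an envelope can sit in the pool for one or more rounds, Eve cannot assume that a message submitted in one round is dealt in that same round.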

What we have described here as dealer-based systems gives the simple intuition behind MIX anonymity systems, originally proposed by David Chaum, a prolific and influential cryptographic researcher. Chaum's seminal ideas have been greatly elaborated on in the last 20 years or so, and for a practical internet service providing anonymity based on the MIX principle, please see JonDonym.

Lotto-Based Systems

The other class of anonymity systems we will consider shall be referred to as lotto-based systems (these are the ones that got Mr. Egerstad in hot water). We are proceeding by analogy, as before with dealer-based systems. In the game of lotto, players attempt to predict which balls will be selected by a mechanical process that seems very random. A collection of numbered balls (say 40) is released into a clear, hard plastic sphere or cylinder which, when rotated, causes the balls to bounce around in an unpredictable manner. After a "sufficiently large" number of revolutions, an arm reaches into the sphere and catches one of the balls as it is bouncing around. The selected ball becomes the first number. The arm then reaches in again, catches another ball, and so on, until the required number of balls to complete the game has been selected (say 6). All the time the sphere is rotating, and the remaining balls bounce off each other and the hard surface of the sphere.

Imagine now that instead of adding 40 balls at the beginning to the sphere, we keep on adding new balls, for example at the rate of a few per second. Also imagine that the arm does not just pull out 6 balls but rather it continuously extracts one ball at a time. We might think of this setup as the perpetual lotto system with balls continuously entering and leaving the system. If we put a ball into the sphere, how long would it take to come out? If we took the numbers off the balls (let them all just be white balls), when would we know that a given ball has come out?

These principles underpin several anonymity systems, such as Crowds. We replace the balls with messages, and the rotating sphere becomes a collection of servers that randomly route (bounce) the messages amongst themselves before delivering each message to its intended recipient. In dealer-based systems the anonymity of Alice's receiver was tied to the size of the threshold used by the dealer. In lotto-based systems Alice's message is potentially mixed with all messages in the system at the same time, which may be a large number depending on the traffic rate into the system.
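The bouncing can be simulated with a biased coin at each server. The sketch below is a loose illustration of the idea only and does not reproduce the actual Crowds protocol; the function `route`, the parameter `p_forward` and the server names are assumptions made for the example.

```python
import random


def route(servers, p_forward, rng):
    """Return the servers a message visits before it is delivered."""
    path = [rng.choice(servers)]         # the first hop is always a random server
    while rng.random() < p_forward:      # biased coin: bounce again or deliver
        path.append(rng.choice(servers))
    return path


rng = random.Random(42)
servers = [f"server{i}" for i in range(20)]
lengths = [len(route(servers, 0.75, rng)) for _ in range(20000)]
print(sum(lengths) / len(lengths))  # average number of hops in this toy model
```

In this toy model the mean path length is 1 / (1 - p_forward), so a coin that says "bounce" three times out of four gives about 4 hops on average. An observer who sees a message leave one server cannot tell whether that server originated it or merely passed it along.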

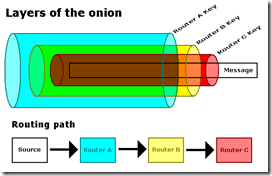

Tor - The onion routerTor is a particular, and seemingly popular, implementation of an anonymity network that falls into our simple lotto-based classification. Tor is short for "The onion router", and is considered to be a second generation version of onion routing for anonymous communication (onion routing 2.0 in common parlance). Here an onion refers to a message that is encrypted multiple times, or in layers. When Alice wants to send a message she first chooses a path through the Tor network based on a list of available servers. So instead of her message bouncing around randomly in the system (as in Crowds), she actually chooses the route before submitting the message. To protect her selected path she encrypts the message with the public key of the last server in the path, then encrypts that encrypted message with the key of the second last server in the path, and so on, for each server in her chosen path. The following diagram shows the process (see this wikipedia article for better resolution and more explanation).

Once all the layered encryption is completed, Alice sends her encrypted message (now called an onion) to the first server she selected in the path. The server decrypts one layer of the onion to reveal yet another onion and the next server in the path. The first server sends the onion to the second server in the path who decrypts the next layer and forwards the remaining onion to the next named server in the path, and so on. The final server in the path decrypts the onion one final time to reveal the original message and the intended recipient. The final server delivers the message to Alice's intended recipient.
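The wrapping and peeling of layers can be illustrated with a short sketch. To stay self-contained it uses a toy XOR cipher built from SHA-256 with one shared key per server. This is not secure and is not Tor's actual cryptography; the helper names, keys and server names are all hypothetical.

```python
import hashlib


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy cipher: XOR data with a SHA-256 counter keystream. NOT secure."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        pad = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


def build_onion(message: bytes, recipient: str, path: list, keys: dict) -> bytes:
    """Wrap the message once per server; the innermost layer is for the last server."""
    onion, next_hop = message, recipient
    for server in reversed(path):
        # Each layer hides where the onion goes next, plus the onion inside it.
        onion = keystream_xor(keys[server], next_hop.encode() + b"\n" + onion)
        next_hop = server
    return onion


def peel(server: str, onion: bytes, keys: dict):
    """Remove one layer: returns (next hop, remaining onion or final message)."""
    next_hop, _, inner = keystream_xor(keys[server], onion).partition(b"\n")
    return next_hop.decode(), inner


keys = {"entry": b"k1", "middle": b"k2", "exit": b"k3"}  # hypothetical per-server keys
path = ["entry", "middle", "exit"]
onion = build_onion(b"hello bob", "bob", path, keys)
hop = path[0]
while hop in keys:  # each server on the path peels exactly one layer
    hop, onion = peel(hop, onion, keys)
print(hop, onion)  # prints: bob b'hello bob'
```

Note what the last call to `peel` returns: the final server on the path ends up holding both the recipient's name and the original message in the clear, unless Alice encrypted the message herself before wrapping it.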

Back to Dan

In Tor there are 3 roles for server nodes: entry nodes that accept messages into the network, routing nodes that decrypt one onion layer and then forward the remaining onion, and finally exit nodes that deliver the message out of the network to the intended recipient. Any physical server in the Tor network can act in any of these node roles. What is revealed about Alice? Well, the entry node sees her IP address, while the routing nodes learn only the previous and next nodes in the path. However, the exit node learns the message itself and the address of the recipient. If Alice wants this last hop to be secure then she must explicitly use a protocol like SSL to protect her message. But apparently this is a little-known fact, as Dan Egerstad demonstrated. This caveat is (now) clearly indicated on the Tor project site, along with a general warning: Be smart and learn more. Understand what Tor does and does not offer. This list of pitfalls isn't complete, and we need your help identifying and documenting all the issues.

Servers can be donated to the Tor network to act as nodes, and currently there are about 1500 nodes. So Dan Egerstad created/donated 5 exit nodes and sniffed the traffic contents of these nodes as they forwarded traffic out of the Tor network. He was not targeting any specific users of Tor, only looking at the traffic that was routed to his exit nodes as part of the overall Tor protocol.

Surprisingly, Egerstad obtained login and password information for over 1,000 e-mail accounts belonging to foreign embassies, corporations and human rights organizations. More specifically, he harvested information on users from embassies belonging to Australia, Japan, Iran, India and Russia, and also account information belonging to the foreign ministry of Iran, the United Kingdom's visa office in Nepal and the Defence Research and Development Organization in India's Ministry of Defence. Egerstad ensured controversy (and more than that actually) when he published the login and password details for 100 select email accounts, using the justification that he felt it would be the most effective way to make the account owners aware that their communication had been compromised. Mr. Egerstad has stated that there is no security flaw with Tor - the real threat comes from user expectations that their message contents are being protected end-to-end by Tor, when in fact encryption is only applied to internal Tor network communication.

Lessons Learned

Mr. Egerstad has highlighted that Tor obfuscates the path that a message follows through the Tor network (protecting the sender), but confidentiality is not provided at the exit point to the network. Of course the Tor people have documented this property, but there is nothing like an incident to drive the message home. Users should also understand that the role of the exit nodes is still based on the honour system, for both delivery and respecting the privacy of message content.

The main threat addressed by the design of Tor is protecting the identity of Alice from eavesdroppers - that is, it should be difficult to determine the identity of a sender from the routing of her messages in the Tor network. So if an attacker is targeting Alice they may have to compromise a large number of nodes in the Tor network (or introduce a large number of their own nodes) to trace a path back to Alice. This sounds like hard work. Mr. Egerstad has shown how to harvest account details by merely attaching a new exit node and eavesdropping on the Tor business-as-usual traffic that is routed through the node by user path choices. So while passive eavesdropping may not compromise a specific person like Alice, it can still reveal valuable security information to the attacker.

4 comments:

I learn from this story that you should always check for proper requirements definition. You're able then to check against them to give the assurance that a sufficient level of security is achieved.

Second point is that you might need to re-evaluate your existing systems for new vulnerabilities.

"However the exit nodes learn everything - the IP address of Alice (for a return message), the message itself, and the address of the recipient."

I believe this is incorrect. Since the "return message" would go back to the Tor exit node (as it is the node that sent the message), Alice's original IP address is never revealed.

Alice's identity, however, may be exposed in the application layer data, and that is what the core of the issue is all about.

Hello paerty,

I seem to recall reading that the IP address may be exposed, but you are right that it would not make sense. I have updated the statement.

I don't think that the core issue here is exposure of Alice's identity, but rather logon and password information to target systems (the recipient node) is exposed.

regards Luke

Sometime the security of computer depends on how it network.

Laby[big suits for men]
